Virtualization: The Hyper-Efficiency of Software-Defined Datacenters

In this modern era of computing, no one should need convincing of the disadvantages of traditional infrastructure architectures. In a nutshell, they are outdated, unoptimized, and inflexible, and they force you to pay for resources that you don’t use.

But there is an elegant solution that allows organizations to break free from their hardware restrictions: virtualization.

In this article, we explore some of the issues posed by traditional datacenter architecture and show how virtualization can help organizations maximize the ROI of their hardware.

The Exponential Growth of Data

Early in the previous decade, human civilization entered the Zettabyte Zone: the era in which the total amount of digital data crossed the 10²¹-byte threshold. And the data is still growing. Global market intelligence firm IDC estimates that the world’s “datasphere” will reach 163 zettabytes in 2025, a tenfold increase from an already staggering 16.1 zettabytes in 2016.
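The “tenfold” figure is easy to check with a little arithmetic. The short Python sketch below (Python is used purely for illustration) computes the growth factor between the two IDC figures and the compound annual growth rate they imply. It assumes smooth year-over-year compounding from 2016 to 2025, which the IDC estimate does not itself claim.

```python
# IDC figures quoted above, in zettabytes (1 ZB = 10**21 bytes).
ZB_2016 = 16.1
ZB_2025 = 163.0
YEARS = 2025 - 2016


def growth_factor(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


factor = growth_factor(ZB_2016, ZB_2025)
rate = cagr(ZB_2016, ZB_2025, YEARS)
print(f"growth factor: {factor:.1f}x")       # growth factor: 10.1x
print(f"implied annual growth: {rate:.1%}")  # implied annual growth: 29.3%
```

In other words, under that smoothing assumption the estimate corresponds to the datasphere growing by roughly 29% a year, compounded.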

Organizations are now storing and processing more data than ever, and the more data an organization has, the more access, control, and management that data requires. This massive data sprawl is compounded by rising performance demands: users expect lower costs, faster responses, and more intuitive management.

Hardware Costs vs Required Capacity

It’s true that infrastructure hardware, especially storage hardware, is becoming cheaper. But organizations’ storage needs are ballooning at astronomical rates, and the cost of expanding capacity to match those needs often outstrips the savings from cheaper hardware. Maintaining a hardware-driven datacenter that covers today’s needs is getting more expensive.
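A simplified model makes this trade-off concrete: the cost of the capacity an organization needs is capacity multiplied by price per terabyte, so whenever capacity grows faster than prices fall, the total keeps rising. The sketch below uses hypothetical numbers (500 TB today, 100 currency units per TB, 30% yearly growth in required capacity, 10% yearly decline in price) and ignores refresh cycles, power, and staffing.

```python
from typing import List


def capacity_cost(capacity_tb: float, price_per_tb: float) -> float:
    """Cost of provisioning `capacity_tb` terabytes at `price_per_tb`."""
    return capacity_tb * price_per_tb


def project_costs(capacity_tb: float, price_per_tb: float,
                  demand_growth: float, price_decline: float,
                  years: int) -> List[float]:
    """Cost of the required capacity at each year's prices, year 0 included.

    demand_growth: fractional yearly growth in required capacity (0.30 = +30%)
    price_decline: fractional yearly fall in price per TB (0.10 = -10%)
    """
    costs = []
    for _ in range(years + 1):
        costs.append(capacity_cost(capacity_tb, price_per_tb))
        capacity_tb *= 1 + demand_growth
        price_per_tb *= 1 - price_decline
    return costs


# Hypothetical inputs, chosen only to illustrate the effect.
costs = project_costs(capacity_tb=500, price_per_tb=100.0,
                      demand_growth=0.30, price_decline=0.10, years=5)
for year, cost in enumerate(costs):
    print(f"year {year}: {cost:,.0f}")
```

The cost rises whenever (1 + demand_growth) * (1 - price_decline) > 1. With these inputs that factor is 1.3 * 0.9 = 1.17, so the bill grows about 17% a year even though each terabyte gets cheaper.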

Traditional Infrastructure’s Problematic ROI

At its core, traditional infrastructure usually means underutilized hardware. When hardware is discrete and each workload is isolated on its own machines, there is no way to balance workloads seamlessly across the available hardware.

Organizations that keep capacity at the maximum projected level, regardless of how often that level is actually needed or reached (in other words, those running a hardware-driven datacenter), constantly suffer from overprovisioned hardware, a larger datacenter footprint, increased complexity, a heavier management load, and the burden of hiring enough skilled staff to manage their IT.

Any part of an organization’s infrastructure that is still based on traditional architecture may warrant prompt intervention. The cost of leaving it as-is is just too great.
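The cost of isolation described above can be illustrated by sizing hardware for the same workloads in two ways: dedicated hardware sized for each workload’s own peak, as in a traditional datacenter, versus a shared pool sized for the peak of the combined load. The demand figures below are hypothetical; the point is only that when workloads peak at different times, the pooled total is smaller and utilization is higher.

```python
from typing import Dict, List

# Workload name -> demand per time slot (e.g. CPU cores per hour).
# All series must have the same length; zip() would silently truncate otherwise.
Demand = Dict[str, List[int]]


def dedicated_capacity(demand: Demand) -> int:
    """Discrete hardware: each workload is sized for its own peak."""
    return sum(max(series) for series in demand.values())


def pooled_capacity(demand: Demand) -> int:
    """Pooled hardware: sized for the peak of the combined load in any one slot."""
    combined = [sum(slot) for slot in zip(*demand.values())]
    return max(combined)


def average_utilization(demand: Demand, capacity: int) -> float:
    """Share of provisioned capacity actually used, averaged over all slots."""
    used = sum(sum(series) for series in demand.values())
    slots = len(next(iter(demand.values())))
    return used / (capacity * slots)


# Hypothetical hourly demand (CPU cores) for three workloads that peak at different times.
demand: Demand = {
    "web":     [2, 3, 8, 8, 3, 2],
    "batch":   [8, 7, 1, 1, 2, 8],
    "reports": [1, 1, 2, 6, 6, 1],
}

for label, capacity in (("dedicated", dedicated_capacity(demand)),
                        ("pooled", pooled_capacity(demand))):
    print(f"{label}: {capacity} cores, {average_utilization(demand, capacity):.1%} utilized")
# dedicated: 22 cores, 53.0% utilized
# pooled: 15 cores, 77.8% utilized
```

If the workloads all peaked in the same hour, the two numbers would converge; the gap comes entirely from peaks that do not coincide.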

Converged Infrastructure (CI): Better… but not Optimal

Converged infrastructure (CI) offered some relief from the woes of traditional datacenters. Placing the different infrastructure resources (compute, storage, database, and network) in a single rack allowed better communication between these components, as well as integrated, optimized management. That is why many specialty vendors still employ this model in their products.

The catch was that CI lacked operational and provisioning flexibility, since the components were still discrete and limited by their individual capacities.

Converged infrastructure still suffered from many of the problems of traditional architecture.

The Virtualization Evolution: The Rise of the Software-Defined Data Center (SDDC)

The Benefits of Virtualization & Virtual Machines (VMs)

There are many advantages to opting for virtualization. Some of the general benefits include:

- Industry-standard servers are much cheaper than purpose-built hardware, so infrastructure can be built at a much lower cost. This cuts the total cost of ownership (TCO) of IT systems.

- Because hardware resources are pooled and can be provisioned logically on an as-needed basis (from a single interface), workloads can be consolidated onto fewer machines, each of which is used more efficiently. This reduces the hardware and management burden of an organization’s IT systems.

- A lighter hardware burden means less supporting equipment is needed for power delivery and cooling.

- Unifying the management platform enables more automation and, with it, greater productivity.

- VMs are versatile: they can be deployed on-premises, in the cloud, or across both at once.

- Because VMs are not anchored to specific hardware, availability can be maintained during downtime or maintenance of individual components by migrating VMs to hosts that are still running.

- Hardware resource deployment is automated, with little or no human involvement. It is controlled by software, and governed by policies and business requirements. It can be automatically provisioned on the fly as the organization needs it.
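Consolidation onto fewer machines and evacuation of a host for maintenance can both be sketched as placement problems. The example below uses first-fit decreasing, a classic bin-packing heuristic, to pack hypothetical VMs (sized in CPU cores only) onto as few hosts as possible, then moves the VMs off one host so it can be serviced. It illustrates the idea only; it is not the placement algorithm of any particular virtualization platform, which would also weigh memory, storage, network, and affinity rules.

```python
from typing import Dict, List, Optional

Placement = Dict[str, List[str]]  # host name -> names of the VMs placed on it


def _place(name: str, size: int, free: Dict[str, int],
           placement: Placement, skip: Optional[str] = None) -> None:
    """Put one VM on the first host (in order) with enough free cores."""
    for host in placement:
        if host != skip and free[host] >= size:
            placement[host].append(name)
            free[host] -= size
            return
    raise ValueError(f"no host has room for {name}")


def consolidate(vms: Dict[str, int], hosts: List[str], capacity: int) -> Placement:
    """First-fit decreasing: place the largest VMs first, each on the first host that fits."""
    placement: Placement = {h: [] for h in hosts}
    free = {h: capacity for h in hosts}
    for name in sorted(vms, key=lambda n: vms[n], reverse=True):
        _place(name, vms[name], free, placement)
    return placement


def evacuate(placement: Placement, vms: Dict[str, int],
             capacity: int, host_down: str) -> Placement:
    """Move the VMs on `host_down` into spare capacity elsewhere; other VMs stay put."""
    new: Placement = {h: list(names) for h, names in placement.items()}
    free = {h: capacity - sum(vms[n] for n in names) for h, names in new.items()}
    moving = sorted(new[host_down], key=lambda n: vms[n], reverse=True)
    new[host_down] = []
    for name in moving:
        _place(name, vms[name], free, new, skip=host_down)
    return new


# Hypothetical workloads (name -> CPU cores) and four 16-core hosts.
vms = {"db": 8, "web1": 4, "web2": 4, "cache": 4,
       "ci": 6, "mail": 2, "dns": 1, "files": 3}
hosts = ["h1", "h2", "h3", "h4"]

plan = consolidate(vms, hosts, capacity=16)
print({h: names for h, names in plan.items() if names})
# {'h1': ['db', 'ci', 'mail'], 'h2': ['web1', 'web2', 'cache', 'files', 'dns']}

after = evacuate(plan, vms, capacity=16, host_down="h1")
print(after["h3"])  # ['db', 'ci', 'mail']
```

Here eight hypothetical workloads fit on two of the four hosts, and taking `h1` down for maintenance moves only its own VMs, leaving everything on `h2` where it was.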

Virtualization provided a much more elegant answer to the limitations of traditional datacenter architecture: hardware abstraction. This gave rise to the software-defined datacenter (SDDC).

Organizations not employing virtualization or using virtual machines might ultimately be paying for something they’re not using. Virtualization increases the ROI of datacenter hardware.

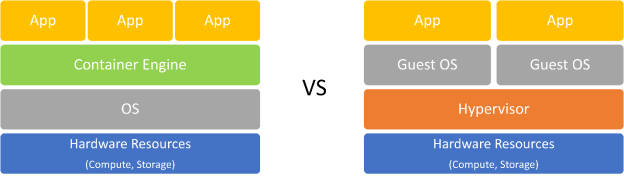

VMs vs Containers: It’s a Valid Question

Useful as VMs are, not every organization needs them. Sometimes the lighter-weight, OS-level virtualization that containers provide is the better fit.

Each Virtualization Option Serves a Purpose

While containers might seem like a less potent option, they can allow two to three times as many instances to run on a single server as VMs can.

Containers include only the application to be run, along with its binaries, libraries, and other dependencies; they share the host operating system’s kernel rather than carrying their own. This makes them much smaller and faster: they start up more quickly, make the application easier to deliver, and use server resources more efficiently.

This makes them well suited to web applications, DevOps testing, and microservices, for example.

By contrast, VMs are larger and boot more slowly, but they are more isolated from each other, since each instance has its own OS kernel. This makes them suitable for legacy apps that need older operating systems, or for cases where complete logical isolation between applications is required.
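To see why per-instance overhead drives density, the sketch below counts how many identical instances fit in a host’s memory. All figures (a 256 GiB host, 16 GiB reserved for the host, a 1 GiB application, 1.5 GiB of guest-OS overhead per VM, about 100 MiB of overhead per container) are hypothetical, and memory is the only resource modeled. Real density also depends on CPU, I/O, and memory-sharing features, so the ratio it prints is an illustration of the mechanism, not a benchmark.

```python
from dataclasses import dataclass


@dataclass
class InstanceProfile:
    app_mib: int       # memory used by the application and its dependencies
    overhead_mib: int  # extra per instance: a guest OS for a VM, runtime overhead for a container


def instances_per_host(host_mib: int, reserved_mib: int, profile: InstanceProfile) -> int:
    """How many identical instances fit in the host's memory (memory is the only limit modeled)."""
    usable = host_mib - reserved_mib
    return usable // (profile.app_mib + profile.overhead_mib)


# Hypothetical figures, for illustration only.
HOST_MIB = 256 * 1024     # 256 GiB host
RESERVED_MIB = 16 * 1024  # kept back for the host OS or hypervisor
vm = InstanceProfile(app_mib=1024, overhead_mib=1536)        # app plus its own guest OS
container = InstanceProfile(app_mib=1024, overhead_mib=100)  # app sharing the host kernel

n_vm = instances_per_host(HOST_MIB, RESERVED_MIB, vm)
n_ct = instances_per_host(HOST_MIB, RESERVED_MIB, container)
print(n_vm, n_ct, round(n_ct / n_vm, 2))  # 96 218 2.27
```

The larger the guest OS is relative to the application, the wider the gap; for a heavy application with a small OS footprint, the advantage shrinks.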

Starting with a Container but Growing to a VM

Given the similarities between containers and VMs, some organizations start with containers and keep adding functionality until they end up in a situation where they would have been better off with VMs. This “container sprawl” can be avoided if proper planning is conducted before sinking funds into either option. The choice requires a thorough analysis of the organization’s needs to strike the right cost-benefit balance and design the architecture accordingly.

Security Issues with Containers & VMs

Containers are generally less secure by nature. VMs running on a type 1 (bare-metal) hypervisor sit on a thin software layer that runs directly on the hardware, which leaves fewer vulnerable touchpoints for attackers. While hypervisors are not impenetrable, general-purpose operating systems tend to be more vulnerable. Containers, by contrast, share the host OS kernel, so a container inherits all of that OS’s vulnerabilities, and an attacker who compromises the underlying OS can access every container running on top of it.

As always, though, a knowledgeable, dedicated IT team can render containers more secure by isolating them from critical OS components.

Often, it’s not even an either/or decision: it’s a matter of when to use each option. In a diverse enough organization, it’s possible that the best option is a mixture of both containers and VMs, each used in specific situations. It is even possible to use VMs with containers on top of them.

Assessing the costs and benefits of each option, and designing the architecture, is best done with experienced consultants who stay current on the latest technologies and their ever-shifting updates, specs, and benefits.

To Recap…

We’ve presented the case against traditional datacenter architecture, and we’ve proposed a solution in virtualization.

However, as is usually the case in IT, there are no silver bullets.

Each organization is different: each has its own needs, operates in its own environment, is governed by its own policies and regulations, and ultimately pursues its own objectives.

A seasoned consultant’s opinion is always the best way to determine whether a solution will actually help a specific organization, or whether the organization will keep sinking funds into dead ends that fail to deliver the required ROI.